Is Your Job's Data Science Tech Stack Intimidating?

Written by Matt Dancho

So many tools to worry about. So few you'll actually use.

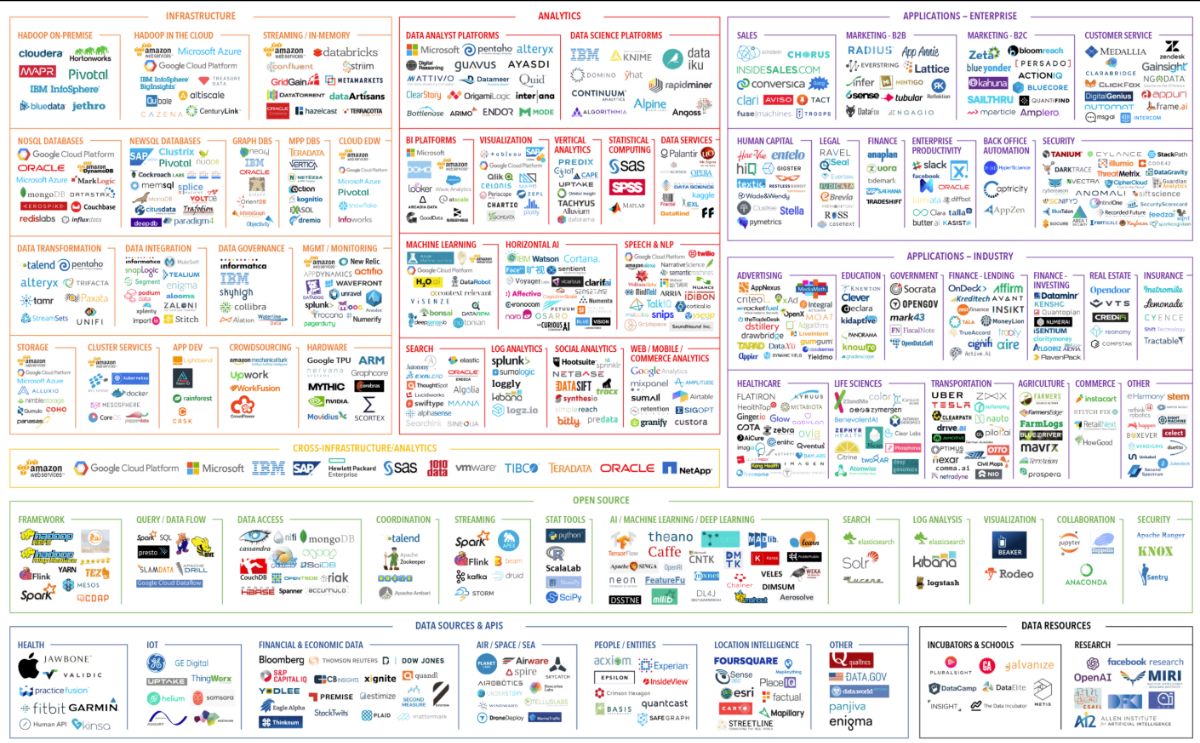

Image Credit: Big Data Landscape 2017

Learning data science is challenging. You need to learn code, you need to learn algorithms, you need to understand business problems, you need to learn databases… the list goes on and on. It’s so much that it overwhelms us.

There are a ton of data scientists suffering from imposter syndrome because the list of tools is massive and they feel like they can never learn everything.

__________________

I was chatting with a student yesterday about his new job, which both of us could not be more thrilled about.

He was explaining that he’s equally excited and nervous about his new Data Science Setup at work, which is quite a bit more complex than his home setup.

At home, he’s used to using RStudio Desktop and reading CSV Files. He’s connected to a SQL Server once.

In his new role, his work setup is much more complex.

He has to:

- Load an RStudio Server Pro instance from JupyterHub
- Git commit to Bitbucket
- Access RStudio Connect for sharing dashboards & reports
- Use Jenkins for continuous integration between Bitbucket and RStudio Connect
- AND connect everything via ODBC to different SAP Business Ops Databases

This is the new reality: the business has 50+ tools that all need to be integrated.

And, this can be INTIMIDATING.

But don’t worry - You’ve got this!

And, I have a few tips to help along the way.

Tip #1 - The real world adds complexity, but fundamentals stay the same

His setup includes several technology buzzwords:

- “ODBC” - This is just how we connect to a SQL database
- “Bitbucket” - Version control
- “Jenkins” - An automation tool that checks your code by running tests that you set up
- “SAP” - Enterprise Resource Planning (ERP) system - Just a big database

Don’t be scared of these. The buzzwords are based on fundamental concepts:

- Connecting to data - Databases and CSVs are fundamentally no different. They are both ways of collecting and storing data.
- Storing code in a repository - If you’ve ever made a GitHub repo to house your code for a portfolio or school project, you’ve got some experience.
- Testing your code - If you’ve ever made an example to test a function, then you’ve created a test. Sure, you may not have automated it with Jenkins, but the tough part (the test) is something you are familiar with.
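To make the first and last points concrete, here is a minimal Python sketch (the same idea applies in R). It assumes a made-up `sales` table and uses an in-memory SQLite database as a stand-in for a work database; the function names are hypothetical. Once the rows are loaded, the analysis code does not care where they came from, and the `assert` lines at the end are the kind of test a tool like Jenkins would simply run for you.

```python
import csv
import io
import sqlite3

# Hypothetical sales data, invented only for illustration.
CSV_TEXT = """region,revenue
East,100
West,250
"""


def rows_from_csv(text):
    """Read rows from CSV text into a list of dicts."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"region": r["region"], "revenue": int(r["revenue"])} for r in reader]


def make_demo_database():
    """Build an in-memory SQLite database standing in for a work database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, revenue INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)", [("East", 100), ("West", 250)]
    )
    return conn


def rows_from_database(conn):
    """Read the same rows from a SQL table into a list of dicts."""
    cursor = conn.execute("SELECT region, revenue FROM sales ORDER BY region")
    return [{"region": region, "revenue": revenue} for region, revenue in cursor]


def total_revenue(rows):
    """The 'analysis' step: identical no matter where the rows came from."""
    return sum(row["revenue"] for row in rows)


csv_rows = rows_from_csv(CSV_TEXT)
db_rows = rows_from_database(make_demo_database())

# A test is just a check like these; automation only decides when it runs.
assert csv_rows == db_rows
assert total_revenue(db_rows) == 350
```

At work, only the connection step would change (for example, an ODBC connection instead of SQLite). The query, the analysis, and the test stay the same.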

Tip #2 - Learn one technology & switching becomes very easy

Chances are that the tools you use 1 year from now are going to be completely different than the ones you are using now.

Adapting is just the practice of learning something similar, then switching when needed.

Here are some groups of tools where you can learn one, then switch when needed:

- R, Python - These are the main coding languages for data science. Learn one, then switch back and forth.
- GitHub, Bitbucket - These are tools for version control & code repository management. Learn one, then you know both.
- AWS, Azure, Google Cloud Platform - These are enterprise cloud servers & IT infrastructure. If you learn to use one, then you can quickly transfer your skills to the others.

Tip #3 - Focus on business value through data science

Companies are going to have 50+ different tools that all need to be connected.

Trying to memorize how to use this combination of tools is not the best use of your valuable time.

Rather, learn how to generate business value through data science.

This is a transferable skill set that works in any situation, regardless of tools.

What should you learn? Try these:

- Working with messy data
- Creating visualizations & explaining the story
- Applying the right algorithms for the predictive task
- Measuring accuracy/error & communicating uncertainty
- Measuring financial results & communicating cost/benefits
- Building applications that help distribute the technology in a form that your organization can use business-wide

When you build this core set of skills, you then have the power to help your organization regardless of what set of tools they put in place.
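As a small illustration of two items from the list (measuring error and communicating cost), here is a hedged Python sketch. All numbers are invented, and the cost per unit of forecast error is an assumption you would replace with a figure from your own business. The point is that the arithmetic is the same whatever tools surround it.

```python
import math

# Hypothetical numbers, invented for illustration only.
actual_units = [120, 95, 130, 110]
forecast_units = [110, 100, 125, 120]
cost_per_unit_error = 4.0  # assumed dollars lost per unit of forecast error


def mean_absolute_error(actual, predicted):
    """Average size of the miss, in the same units as the data."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def root_mean_squared_error(actual, predicted):
    """Like MAE, but penalizes large misses more heavily."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


mae = mean_absolute_error(actual_units, forecast_units)
rmse = root_mean_squared_error(actual_units, forecast_units)

# Translate the error into money so the business can weigh it.
cost_per_period = mae * cost_per_unit_error

print(f"MAE: {mae:.1f} units per period")
print(f"RMSE: {rmse:.1f} units per period")
print(f"Estimated cost of forecast error: ${cost_per_period:.2f} per period")
```

Reporting the error in dollars, not just in units, is what turns an accuracy metric into something a decision-maker can act on.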

Moving Forward

THINGS YOU NEED TO DO TO BECOME AN EXPERT:

- Do the REPS - repeatedly typing & applying code.
- Do the WORK - actually completing projects that matter.
- Solve Problems by Googling - Don’t memorize. Google.
- Learn by TRIAL AND ERROR - When you fail, you learn more than when you succeed.

I look forward to providing you with the best data science for business education.

Matt Dancho

Founder, Business Science

Lead Data Science Instructor, Business Science University